
Artificial Neural Network (ANN)

❐ Neural Network (NN)

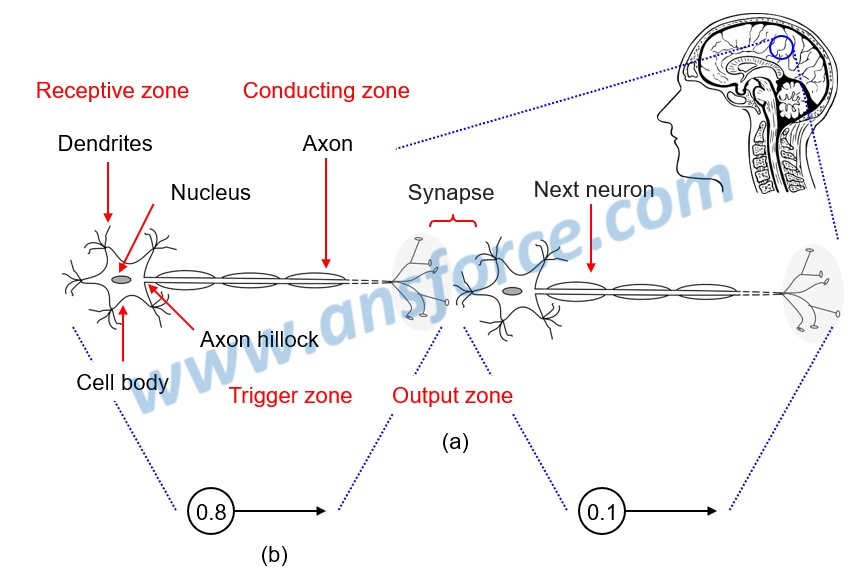

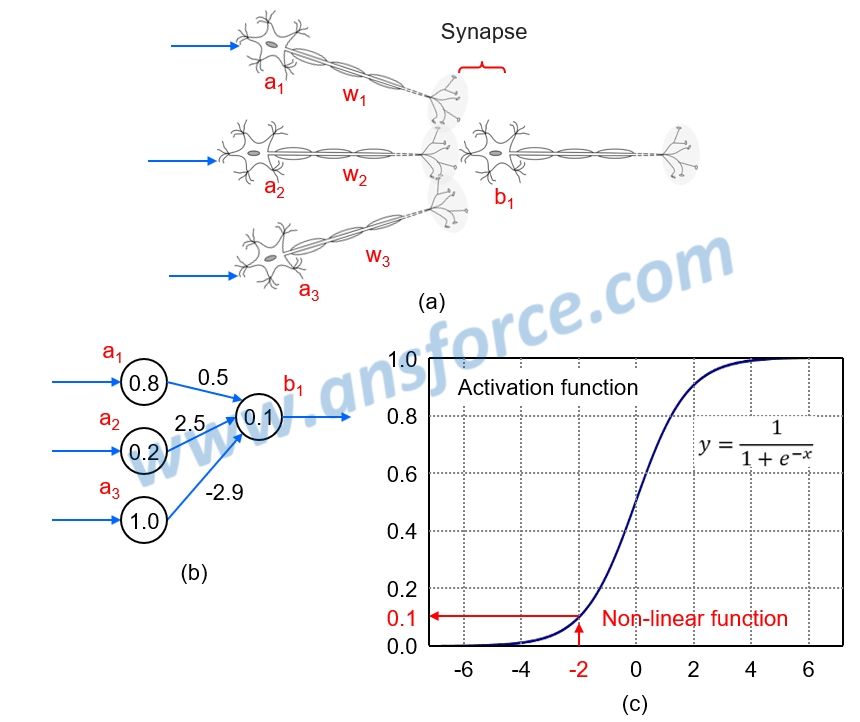

The neural network of the human brain is composed of neurons, and a human brain contains about 86 billion of them. Neurons sense changes in the environment and transmit messages to other neurons. The basic structure of a neuron comprises a cell body (containing the nucleus), dendrites, and an axon, as shown in Fig. 1(a):

➤ Synapse: A junction between a neuron and another neuron, a muscle cell, or a gland cell, where bioelectric signals or chemical substances (such as dopamine and acetylcholine) are transmitted.

➤ Receptive zone: The part from the dendrites to the cell body that receives bioelectric signals or chemical substances. The more input sources there are, the greater their influence on the potential of the cell body.

➤ Trigger zone: The axon hillock, located where the axon joins the cell body, is the point that determines whether a nerve impulse is generated.

➤ Conducting zone: The part of the axon that conducts nerve impulses.

➤ Output zone: The axon terminals, where the arriving nerve impulse causes the synapse to transmit bioelectric signals or chemical substances that affect the next neuron, muscle cell, or gland cell.

Figure 1: Neural network of the human brain.

❐ Artificial Neural Network (ANN)

An Artificial Neural Network (ANN), also called a neural network, is a mathematical model that emulates the structure and function of biological neural networks. It is used to estimate or approximate functions and is one of the most popular models in Artificial Intelligence. To emulate the neural network of the human brain, researchers drew on Hebbian theory, a neuroscience theory that explains how brain neurons change during learning: when one neuron repeatedly stimulates the next neuron across a synapse, the transmission efficiency of that synapse is enhanced. This transmission efficiency corresponds to the “weight” in an artificial neural network.
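Hebbian theory is often summarized by the textbook rule Δw = η·x·y: a weight grows in proportion to how strongly its input x and the receiving neuron's output y are active together. The Python sketch below assumes that simple form; the learning rate `eta` and the toy activity values are illustrative assumptions, not values from this article.

```python
def hebbian_update(weights, inputs, output, eta=0.1):
    """Classic Hebbian rule: each weight grows by eta * input * output."""
    return [w + eta * x * output for w, x in zip(weights, inputs)]


weights = [0.0, 0.0, 0.0]
inputs = [1.0, 0.0, 1.0]   # hypothetical: the 1st and 3rd presynaptic neurons fire
output = 1.0               # hypothetical: the receiving neuron fires as well

for _ in range(5):         # repeated stimulation across the synapses
    weights = hebbian_update(weights, inputs, output)

print(weights)
```

Only the weights whose inputs fired together with the output grow; the weight of the silent second input stays at zero, which is the "repetition strengthens the synapse" idea in numerical form.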

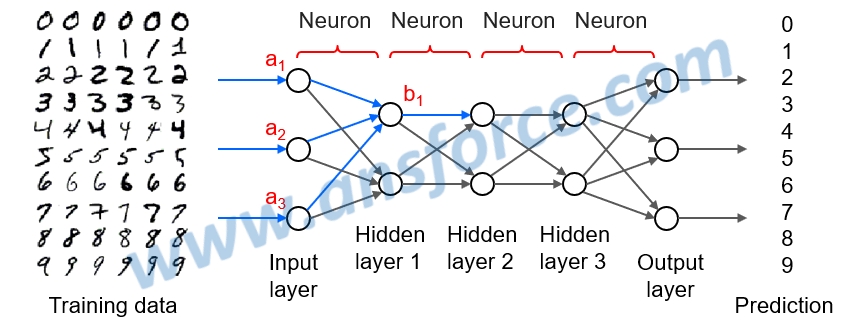

We may simplify the very complicated neurons of the human brain into a circle and an arrow, as shown in Fig. 1(b): the number in a circle indicates the strength of the nerve impulse of that neuron, and the number beside an arrow indicates the synaptic transmission efficiency, i.e., the “weight.” The complicated neural network in the brain can then be simplified into an artificial neural network whose neurons are connected layer by layer, as shown in Fig. 2. Taking handwritten number recognition as an example, the “input layer” receives the handwritten number, and the “output layer” gives the recognition result, one of the numbers 0~9.

Figure 2: Diagram of Artificial Neural Network (ANN).

❐ Single Layer Perceptron (SLP)

In 1958, Frank Rosenblatt invented the perceptron, the first neural network precisely defined by an algorithm, which used Hebbian theory to compute the weights of a minimal single-layer network. It was also the first mathematical model capable of self-learning, and it became the ancestor of later artificial neural network models. Its principle of operation is rather simple: when the electric signal a neuron receives from the previous neurons reaches a “threshold,” a nerve impulse is generated and the signal is transmitted to the next neuron. The calculation process is as follows:

➤ Sum of Product (SOP): The signal strength received by the next neuron (b1) is the sum of the neurons in this layer (a1/a2/a3), each multiplied by its weight (w1/w2/w3), as shown in Fig. 3(a).

➤ Activation function: The perceptron uses an activation function to introduce non-linearity, as shown in Fig. 3(b). Common activation functions include the Sigmoid function, the hyperbolic tangent (tanh), and the Rectified Linear Unit (ReLU). Without an activation function, each neuron's output would simply be a linear combination of the inputs from the previous neurons, so the output would always be a linear function of the input no matter how many layers were stacked, and a deep neural network would be meaningless.
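The two steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming hypothetical single-input "layers" with arbitrarily chosen weights `w1` and `w2`; it shows the SOP, the three activation functions named above, and why stacking layers without an activation function adds nothing.

```python
import math


def weighted_sum(inputs, weights):
    """Sum of Product (SOP): multiply each input by its weight and add the results."""
    return sum(a * w for a, w in zip(inputs, weights))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    return math.tanh(x)


def relu(x):
    return max(0.0, x)


# Hypothetical single-input "layers" with weights w1 and w2.
w1, w2 = 3.0, 0.5


def two_linear_layers(x):
    return w2 * (w1 * x)        # no activation between the layers


def one_linear_layer(x):
    return (w2 * w1) * x        # a single layer with the combined weight 1.5


def two_layers_with_relu(x):
    return w2 * relu(w1 * x)    # ReLU inserted between the layers


for x in [-2.0, 0.0, 1.5]:
    # Without an activation function, the two layers behave exactly like one.
    assert math.isclose(two_linear_layers(x), one_linear_layer(x))

print(two_layers_with_relu(-2.0), one_linear_layer(-2.0))   # 0.0 -3.0
```

With ReLU in between, an input of −2.0 is mapped to 0.0 instead of the −3.0 that the combined weight 1.5 would give, so the pair can no longer be replaced by a single linear layer.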


Figure 3: SLP and Activation function.
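Rosenblatt's perceptron learns by nudging its weights whenever its thresholded output is wrong. Below is a minimal Python sketch of the textbook perceptron learning rule, w ← w + lr·(target − output)·x, in which a learnable bias plays the role of the threshold. The logical AND task, the learning rate, and the number of epochs are assumptions chosen for illustration.

```python
def step(x):
    """Fire (1) once the weighted input reaches the threshold, otherwise stay silent (0)."""
    return 1 if x >= 0 else 0


def predict(weights, bias, inputs):
    # The bias acts as a learnable threshold: firing when SOP + bias >= 0
    # is the same as firing when SOP >= -bias.
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs=10, lr=1.0):
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)   # -1, 0 or +1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([predict(weights, bias, x) for x, _ in AND])   # [0, 0, 0, 1]
```

Weights only change when the prediction is wrong, so once every training example is classified correctly the perceptron stops learning.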

❐ Neural network model

In the human brain, repeated learning strengthens transmission across synapses, much like increasing a weight in an ANN; this is why the method is said to be modeled on the neural network of the human brain. The operation of the neural network model is shown in Fig. 4(a). Assume that the neurons in this layer (a1/a2/a3) are connected to the next neuron (b1) with weights w1/w2/w3, respectively. The SOP giving the nerve impulse strength of the next neuron (b1) through the perceptron is then:

b1=w1×a1+w2×a2+w3×a3=0.5×0.8+2.5×0.2+(−2.9)×1.0=−2.0

As shown in Fig. 4(b), passing −2.0 through the Sigmoid function gives an output of about 0.1 (more precisely, 1/(1+e^2) ≈ 0.12), as shown in Fig. 4(c).
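The arithmetic of Fig. 4 can be checked directly. This Python snippet simply recomputes the SOP and the Sigmoid output from the numbers in the equation above.

```python
import math

a = [0.8, 0.2, 1.0]     # neurons a1, a2, a3
w = [0.5, 2.5, -2.9]    # weights w1, w2, w3

b1 = sum(wi * ai for wi, ai in zip(w, a))   # SOP: 0.4 + 0.5 - 2.9
output = 1.0 / (1.0 + math.exp(-b1))        # Sigmoid activation
print(round(b1, 2), round(output, 3))       # -2.0 0.119
```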

Figure 4: Operation of Neural network model.

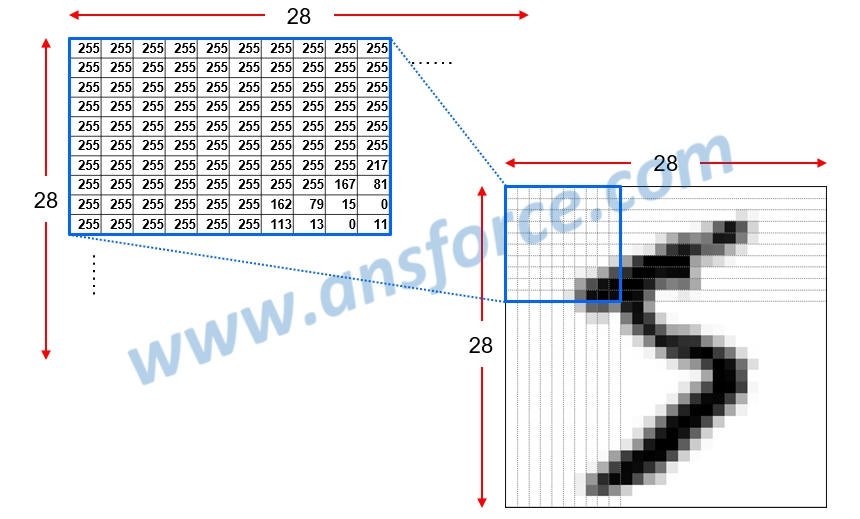

❐ Training a computer to recognize handwriting with an ANN

Researchers in the pattern recognition field need to share a standard database so that a better result reflects a better algorithm, rather than the good luck of data that happens to be easy to interpret. For this reason, many researchers use the MNIST (Modified National Institute of Standards and Technology) database. Taking handwriting recognition as an example, each number in the MNIST database is a gray-level picture of 28x28 = 784 pixels. Each pixel is represented by a number from 0 to 255, where 0 represents black, larger values represent progressively brighter gray, and 255 represents white, as shown in Fig. 5. There are 70,000 pictures in total (60,000 for training and 10,000 for testing), and each picture of a handwritten number comes with a standard answer, i.e., the correct number. This method therefore belongs to “Supervised learning.”

Figure 5: Each number in the MNIST database is a gray-level picture of 28x28 = 784 pixels.
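Before a picture can be fed to the network, its 28x28 grid of gray levels has to become a list of 784 numbers. The Python sketch below flattens a grid row by row into the inputs a1~a784; scaling each value from 0~255 into 0~1 is a common convention assumed here rather than something this article specifies, and the vertical-stroke picture is a hypothetical example.

```python
def to_input_vector(image):
    """Flatten a 28x28 grid of gray levels (0~255) row by row into inputs a1~a784 scaled to 0~1."""
    if len(image) != 28 or any(len(row) != 28 for row in image):
        raise ValueError("expected a 28x28 picture")
    return [pixel / 255 for row in image for pixel in row]


# Hypothetical picture: black (0) everywhere except a white (255) vertical stroke in column 14.
image = [[255 if col == 14 else 0 for col in range(28)] for _ in range(28)]
a = to_input_vector(image)
print(len(a), a[14], a[0])   # 784 1.0 0.0
```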

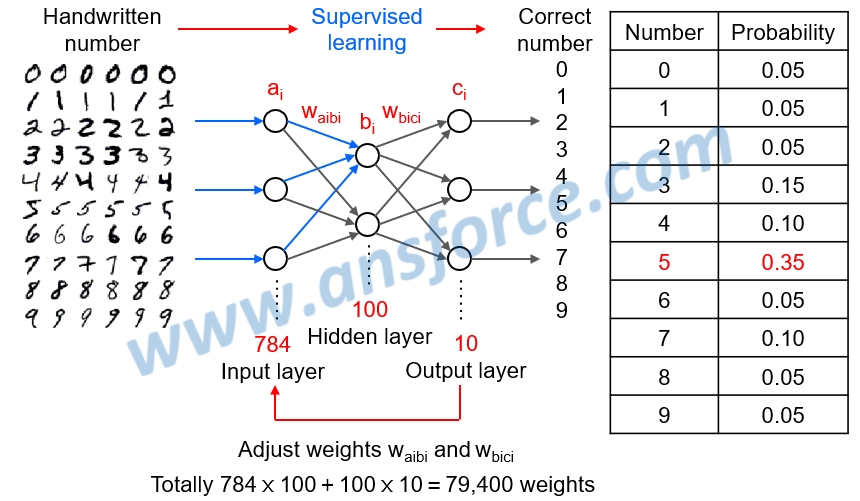

Because each number has 28x28 = 784 pixels and the value of each pixel is one dimension of the input layer, the input layer has 784 dimensions in total. Assume the hidden layer is configured to have 100 dimensions; the output layer has only 10 dimensions, one for each of the numbers 0~9, as shown in Fig. 6.

➤ Input layer: Each number has 784 pixels, giving 784 dimensions (a1~a784) as inputs.

➤ Hidden layer: Assume the hidden layer is configured to have 100 dimensions (b1~b100).

➤ Output layer: Only the numbers 0~9, giving 10 dimensions (c1~c10) as outputs.

Figure 6: Handwriting recognition with the neural network model.

Besides the dimension of each layer, we also need to consider the “weights” between layers. This example has 784x100 + 100x10 = 79,400 weights in total (not counting bias terms). The 100 dimensions (b1~b100) of the hidden layer are calculated as follows:

b1=w(a1b1)×a1+w(a2b1)×a2+w(a3b1)×a3+……+w(a784b1)×a784

b2=w(a1b2)×a1+w(a2b2)×a2+w(a3b2)×a3+……+w(a784b2)×a784

and so on,

b100=w(a1b100)×a1+w(a2b100)×a2+w(a3b100)×a3+……+w(a784b100)×a784

Then, calculate the 10 dimensions (c1~c10) of the output layer:

c1=w(b1c1)×b1+w(b2c1)×b2+w(b3c1)×b3+……+w(b100c1)×b100

c2=w(b1c2)×b1+w(b2c2)×b2+w(b3c2)×b3+……+w(b100c2)×b100

and so on,

c10=w(b1c10)×b1+w(b2c10)×b2+w(b3c10)×b3+……+w(b100c10)×b100
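The hidden-layer and output-layer formulas above amount to two matrix-vector products. A minimal NumPy sketch, assuming randomly initialized weights and a random stand-in for the 784 pixel values (both hypothetical), reproduces the weight count and the layer sizes. Like the formulas, it computes only the SOP; a practical network would normally also apply an activation function to b1~b100, as discussed in the Single Layer Perceptron section.

```python
import numpy as np

n_input, n_hidden, n_output = 784, 100, 10
rng = np.random.default_rng(seed=0)

# Row j of W_ab holds w(a1bj) ... w(a784bj); row k of W_bc holds w(b1ck) ... w(b100ck).
W_ab = rng.normal(0.0, 0.05, size=(n_hidden, n_input))
W_bc = rng.normal(0.0, 0.05, size=(n_output, n_hidden))
print(W_ab.size + W_bc.size)   # 79400


def forward(a):
    """Compute b1~b100 and c1~c10 exactly as the SOP formulas above (no activation)."""
    b = W_ab @ a               # b_j = sum over i of w(aibj) * a_i
    c = W_bc @ b               # c_k = sum over j of w(bjck) * b_j
    return b, c


a = rng.random(n_input)        # hypothetical input: 784 pixel values in [0, 1)
b, c = forward(a)
print(b.shape, c.shape)        # (100,) (10,)
```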

❐ Error Back Propagation (EBP)

Just as repeated learning strengthens synaptic transmission in the brain, an ANN learns by repeatedly adjusting its weights. Each weight is increased or decreased according to the derivative (gradient) of the error with respect to that weight, so that the error between the output and the standard answer becomes smaller. The 79,400 weights, i.e., all w(aibj) and w(bjck), are adjusted repeatedly until this error is as small as possible. In the example in Fig. 6, the input handwritten number is “5,” and the 79,400 weights must be adjusted repeatedly until the correct number “5” receives the highest output probability (0.35 in this example). This is similar to a manager who makes judgments based on information provided by employees. Information flows upward from the employees to the manager (forward propagation); when a judgment turns out to be wrong, corrections flow back down from the manager to the employees (back propagation), and the judgment gradually becomes correct.
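The weight adjustment described above can be sketched for the 784-100-10 network of Fig. 6. This is a minimal NumPy illustration under several assumptions the article does not state: the hidden layer uses a Sigmoid, the output layer uses softmax so that c1~c10 read as probabilities, the error is measured with cross-entropy, and the input is a random stand-in for a handwritten “5.” Each step first propagates forward, then sends the output error backwards through the layers to obtain every weight's gradient.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    e = np.exp(z - z.max())   # subtract the max for numerical stability
    return e / e.sum()


def train_step(W_ab, W_bc, a, target, lr=0.1):
    """One round of forward propagation followed by error back propagation."""
    # Forward: input layer a1~a784 -> hidden layer b1~b100 -> output layer c1~c10
    b = sigmoid(W_ab @ a)
    p = softmax(W_bc @ b)
    # Backward: error at the output layer (softmax + cross-entropy gradient) ...
    delta_c = p - target
    # ... propagated back to the hidden layer through the weights and the Sigmoid slope
    delta_b = (W_bc.T @ delta_c) * b * (1.0 - b)
    # Adjust every w(bjck) and w(aibj) a small step against its gradient
    W_bc = W_bc - lr * np.outer(delta_c, b)
    W_ab = W_ab - lr * np.outer(delta_b, a)
    return W_ab, W_bc, p


rng = np.random.default_rng(seed=0)
W_ab = rng.normal(0.0, 0.05, size=(100, 784))   # 78,400 weights w(aibj)
W_bc = rng.normal(0.0, 0.05, size=(10, 100))    # 1,000 weights w(bjck)

a = rng.random(784)   # hypothetical stand-in for the pixels of a handwritten "5"
target = np.zeros(10)
target[5] = 1.0       # standard answer: the number 5 (output c6)

history = []
for _ in range(20):
    W_ab, W_bc, p = train_step(W_ab, W_bc, a, target)
    history.append(p[5])   # probability given to "5" before this step's update

print([round(float(x), 3) for x in history[::5]])
```

Running the loop, the probability assigned to “5” should rise over the 20 steps as the weights are adjusted; a real training run would repeat this over many different pictures rather than one.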

❐ Training and prediction of Neural network

Now, you might feel your head swimming, but it is not over yet. The learning and prediction process of neural network is far more complicated than the description above.

➤ Training stage: Training is performed by inputting a large amount of data with known answers. Whenever the output is wrong, the 79,400 weights are adjusted, and this is repeated until the output approaches the standard answer. Training may take anywhere from a few seconds to a few days. For example, we input the 60,000 MNIST training pictures of handwritten numbers, each with a standard answer giving the correct number, and whenever the output for any picture is wrong, we adjust the 79,400 weights, i.e., w(aibj) and w(bjck), until the error between the outputs and the standard answers is minimized.

➤ Prediction stage: New data not used in the training stage is input, and the output calculated with the already-adjusted 79,400 weights is the prediction of this artificial neural network. This is completed almost instantly, and its accuracy depends on the weights obtained during the training stage.

It can be seen from the example above that training and prediction in a neural network are similar to the learning and judgment process of human beings: we usually take a long time to learn (training), but once learning is done, judgment (prediction) is almost instant.
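The split between a slow training stage and a fast prediction stage can be seen in a small Python sketch. To keep it short and runnable, it replaces MNIST with a hypothetical two-class toy dataset of 2-D points and shrinks the network to 2 inputs, 8 hidden neurons, and 2 outputs; the learning rate, epoch count, and initialization are assumptions. `train` loops over the labeled data many times, while `predict` is a single forward pass with the weights already fixed.

```python
import numpy as np

rng = np.random.default_rng(seed=1)


def make_data(n):
    """Hypothetical toy data: class 0 clustered near (-2, -2), class 1 near (+2, +2)."""
    y = rng.integers(0, 2, size=n)
    centers = np.where(y[:, None] == 1, 2.0, -2.0)
    X = centers + rng.normal(0.0, 0.5, size=(n, 2))
    return X, y


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(Z):
    E = np.exp(Z - Z.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)


def forward(params, X):
    W1, b1, W2, b2 = params
    H = sigmoid(X @ W1 + b1)
    return H, softmax(H @ W2 + b2)


def train(X, y, n_hidden=8, epochs=500, lr=0.1):
    """Training stage: slow, repeats over the labeled data many times, adjusting every weight."""
    W1 = rng.normal(0.0, 1.0, size=(2, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 1.0, size=(n_hidden, 2))
    b2 = np.zeros(2)
    Y = np.eye(2)[y]                          # one-hot standard answers
    for _ in range(epochs):
        H, P = forward((W1, b1, W2, b2), X)
        dZ2 = (P - Y) / len(X)                # output error (softmax + cross-entropy)
        dZ1 = (dZ2 @ W2.T) * H * (1.0 - H)    # error propagated back to the hidden layer
        W2 -= lr * H.T @ dZ2
        b2 -= lr * dZ2.sum(axis=0)
        W1 -= lr * X.T @ dZ1
        b1 -= lr * dZ1.sum(axis=0)
    return W1, b1, W2, b2


def predict(params, X):
    """Prediction stage: one forward pass with the already-trained weights."""
    _, P = forward(params, X)
    return P.argmax(axis=1)


X_train, y_train = make_data(200)
params = train(X_train, y_train)

X_new, y_new = make_data(50)                  # new data not seen during training
accuracy = (predict(params, X_new) == y_new).mean()
print(f"accuracy on new data: {accuracy:.2f}")
```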

❐ Model of machine learning

Data science is the science of data collection, data comprehension, data analysis, and data prediction. The mathematical or probabilistic rules that exist among different variables in the data are called a “model,” and models can be applied in many fields. For example, in the game of Go, we can build a model that analyzes past games and uses them to estimate each player's winning percentage. Such a model must be established according to the rules of Go, probability theory, and some basic assumptions.

Machine learning establishes a model through data analysis and uses the model to predict results; this technique is sometimes also called Data mining or Predictive modeling. For example, it can be used to predict whether an email is spam or whether an online transaction is fraudulent.

To establish a model, we must select relevant “feature values,” a step called “feature selection.” A feature value is a “variable” that machine learning takes as input, and its value quantitatively describes a feature of the target. The accuracy of the model depends heavily on which feature values are selected. For example, handwriting recognition needs to adjust the center and size of the handwritten characters, rather than simply dividing each character into pixels and feeding them into the artificial neural network.
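As one example of adjusting the center of a handwritten character, the sketch below shifts a digit so that its center of mass sits in the middle of the picture; the MNIST documentation describes centering its digits by center of mass in a similar way. This is a minimal NumPy sketch, assuming a 2-D array of gray levels and a hypothetical 4x4 blob as the test image. `np.roll` wraps pixels around the edges, which is harmless only when the character is surrounded by blank background.

```python
import numpy as np


def center_by_mass(image):
    """Shift a grayscale character so its center of mass sits at the image center."""
    img = np.asarray(image, dtype=float)
    total = img.sum()
    if total == 0:
        return img.copy()                 # blank picture: nothing to center
    rows, cols = np.indices(img.shape)
    r_mass = (rows * img).sum() / total   # brightness-weighted average row
    c_mass = (cols * img).sum() / total   # brightness-weighted average column
    shift_r = int(round((img.shape[0] - 1) / 2 - r_mass))
    shift_c = int(round((img.shape[1] - 1) / 2 - c_mass))
    # np.roll wraps around the edges; assumed safe because the background is blank
    return np.roll(img, shift=(shift_r, shift_c), axis=(0, 1))


blob = np.zeros((28, 28))
blob[0:4, 0:4] = 255.0                    # hypothetical character stuck in the top-left corner
centered = center_by_mass(blob)
```

After centering, the same character produces similar inputs wherever it was written on the page, which is the kind of feature adjustment the paragraph above refers to.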

【Remark】The contents above have been simplified for a general audience and may differ slightly from current industry practice. If you are an expert in this field and would like to share your opinions, please contact the writer. If you have any industrial or technical questions, please join the community for further discussion.